Tag Archives: Oklahoma

Thoughts from an Agronomist- 1 Management of the Primordia

Josh Lofton, Cropping Systems Specialist

Many crop management recommendations emphasize actions that must be taken well before a crop reaches what we often call “critical growth stages.” Management this early can seem counterintuitive when the crop still looks small, healthy, or unchanged aboveground. However, much of a crop’s yield potential is determined early in the season at a level we cannot see in the field. Long before flowers, tassels, or heads (or any reproductive structure) appear, the plant is already making developmental decisions that shape its final yield potential. Understanding this “behind the scenes” process helps explain why timely, early-season management is often more effective than trying to correct problems later.

At the center of this process is the shoot apical meristem, commonly referred to as the growing point. This tissue produces leaf and reproductive primordia, which are the earliest developmental stages of every future organ in the plant. These primordia form well before the corresponding plant parts are visible. Once these structures initiate, or fail to initiate because of stress, the outcome is permanent. The plant cannot go back later in the season and recreate leaf number, leaf size, or reproductive capacity. As a result, early environmental conditions and management decisions play a disproportionate role in determining yield potential.

Corn is a good example of how early development influences final yield. By the time corn reaches the V4 growth stage, the plant only has four visible leaves with collars, yet internally it is far more advanced. Most of the total leaf primordia that will eventually form the full canopy have already begun, and the potential size of the ear is starting to be established. During this stage, the growing point is still below the soil surface and somewhat protected from some stressors but highly susceptible to others. Nitrogen deficiency, cold temperatures, moisture stress, compaction, or herbicide injury at or before V4 can reduce leaf number and limit leaf expansion. Even if growing conditions improve later, the plant cannot replace leaf primordia that were never formed, which reduces its ability to intercept sunlight and support high yields.

As corn approaches tasseling (VT), the crop enters a stage that is visually and physiologically important. Pollination, fertilization, and early kernel development occur at this time, and stress can have a critical impact on kernel set. However, by VT, the plant has already completed leaf formation, and much of the ear size potential has already been determined several growth stages earlier. Management at VT is therefore focused on protecting yield rather than creating it. Late-season nutrient applications may improve plant appearance or maintain green leaf area, but they cannot increase leaf number or rebuild ear potential lost due to early-season stress. This distinction helps explain why some late inputs show limited yield response even when the crop looks responsive.

Grain sorghum provides another clear example of why early management is emphasized. Although sorghum often grows slowly early in the season and may appear unimportant during the first few weeks after emergence, the first 30 days are among the most critical periods in its development. During this time, the growing point is actively producing leaf primordia and transitioning from vegetative growth toward reproductive development. Head size potential is primarily established during this early window, and the plant’s capacity to support tillers is influenced by early nutrient availability and moisture conditions. Stress from nitrogen deficiency, drought, weed competition, or restricted rooting during the first 30 days can reduce head size and kernel number long before visible symptoms appear.

Once sorghum reaches later vegetative and reproductive stages, much like corn at VT, management shifts from building yield potential to protecting what has already been determined. Improving conditions later in the season can help maintain plant health and grain fill, but it cannot fully compensate for early limitations imposed at the primordial level. This is why early fertility placement, timely weed control, and moisture conservation are consistently emphasized in sorghum production systems.

Across crops, a typical pattern emerges: the growth stages we observe in the field often reflect decisions the plant made weeks earlier. When agronomists stress early-season management, they are responding to plant biology rather than simply following tradition. By the time visible “critical stages” arrive, the plant has already established many of the components that define yield potential.

The key takeaway is that effective crop management must be proactive rather than reactive. Early-season decisions support the crop while it is still determining how many leaves it can produce, how large its reproductive structures can become, and how much yield it can ultimately support. Waiting until stress becomes visible often means responding after the plant has already adjusted its potential downward. Recognizing what is happening at the primordial level helps explain why management ahead of critical stages consistently delivers the greatest return, even when the crop appears small and unaffected aboveground.

For questions or comments reach out to Dr. Josh Lofton

josh.lofton@okstate.edu

One Well-Timed Shot: Rethinking Split Nitrogen Applications in Wheat Production

Brian Arnall, Precision Nutrient Management Specialist

Samson Abiola, PNM Ph.D. Student.

Nitrogen is the most yield-limiting nutrient in wheat production, but it’s also the most unpredictable. Apply it too early, and you risk losing it to leaching or volatilization before your crop can use it. Apply it too late, and your wheat has already determined its yield potential; you’re just feeding protein at that point. For decades, the conventional wisdom has been to split nitrogen applications: put some down early to get the crop going, then come back later to apply again. But does splitting actually work? And more importantly, when is the optimal window to apply nitrogen if you want to maximize both yield and protein quality? We spent three years across different Oklahoma locations testing every timing scenario to answer these questions.

How We Tested Every Nitrogen Timing Scenario in Oklahoma Wheat

From 2018 to 2021, we conducted field trials at three Oklahoma locations (Perkins, Lake Carl Blackwell, and Chickasha) representing different soil types and growing conditions across the state. We tested three nitrogen rates (0, 90, and 180 lbs N/ac), applied as urea at five critical growth stages based on growing degree days (GDD). These timings were 0 GDD (preplant, before green-up), 30 GDD (early tillering), 60 GDD (active tillering), 90 GDD (late tillering, approximately Feekes 5-6), and 120 GDD (stem elongation, approaching jointing). We also compared single applications at each timing against split applications, where half the nitrogen (45 lbs N/ac) went down preplant and the other half (45 lbs N/ac) was applied in-season.

The Sweet Spot: Yield and Protein at the 90 lbs N/ac Rate

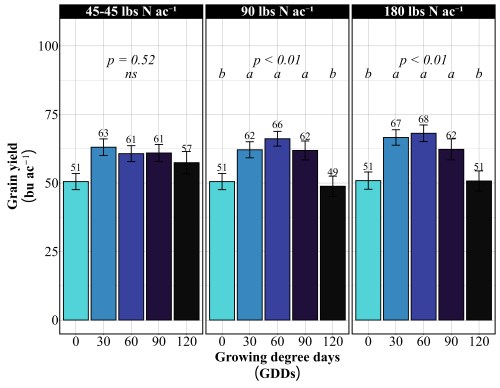

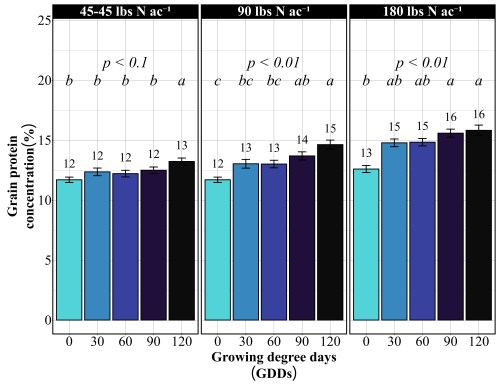

Across all site-years, at the 90 lbs N/ac rate, timing had a significant impact on both yield and protein. The highest yields came from the 30 and 60 GDD timings, producing 62 to 66 bu/ac, with 60 GDD reaching the peak (Figure 1). Protein at these early timings stayed relatively modest at 13%. The 90 GDD timing delivered 62 bu/ac with 14% protein, matching the yield of the 30 GDD application but pushing protein a percentage point higher (Figure 2). The real problem appeared at 120 GDD. Delaying application until stem elongation dropped yields to just 49 bu/ac, even though protein climbed to 15%. That’s a 13 bushel penalty compared to the 90 GDD timing. At current wheat prices per bushel, that late application may cost farmers over $100 per acre in lost revenue. By 120 GDD, the crop has already determined its yield potential: tillers are set and head numbers are locked in, so nitrogen applied at this stage can only be directed toward protein synthesis, not building more yield components.

More Nitrogen Does Not Lead to Higher Yield

Doubling the nitrogen rate to 180 lbs N/ac revealed something critical: more nitrogen doesn’t mean more yield. The yield pattern remained nearly identical to the 90 lbs N/ac rate. The 60 GDD timing produced the highest yield at 68 bu/ac, followed closely by 30 GDD at 67 bu/ac. The 90 GDD timing yielded 62 bu/ac, and the 120 GDD timing again crashed to 51 bu/ac. The only difference between the two rates was protein concentration (Figure 2). At 180 lbs N/ac, protein levels increased across all timings: 13% at preplant, 15% at both 30 and 60 GDD, 15-16% at 90 GDD, and 16% at 120 GDD. This confirms a fundamental principle: once farmers supply enough nitrogen to maximize yield potential, which occurred at 90 lbs N/ac in these trials, additional nitrogen only increases grain protein. It does not build more bushels. Unless farmers are receiving premium payments for high-protein wheat, that extra 90 lbs of nitrogen represents a cost with no yield return.

Should farmers split their nitrogen application?

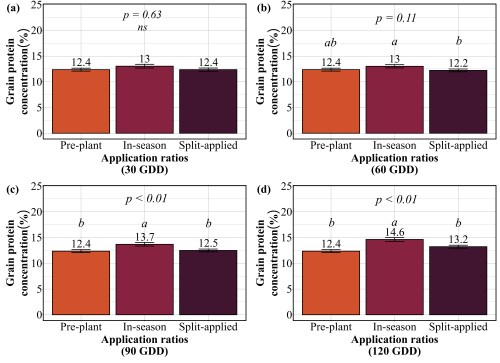

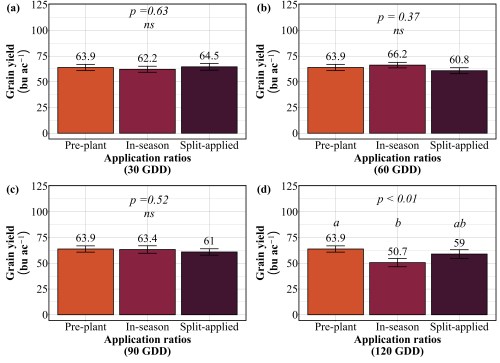

Now that timing has been established as critical, the next question becomes: should farmers split their nitrogen applications, or is a single application sufficient? The conventional recommendation has been to split nitrogen: apply part preplant to support early growth and tillering, then return with a second application later in the season to boost protein and finish the crop. But does the data support this practice? We compared three strategies at each timing: applying all nitrogen preplant, applying all nitrogen in-season at the target timing, or splitting nitrogen equally between preplant and the in-season timing. The goal was to determine whether the extra trip across the field delivers better results.

Our findings revealed that splitting provided no consistent advantage. At 30 GDD, all three strategies (preplant, in-season, and split) performed identically, producing 62-65 bu/ac with 12-13% protein (Figures 3 and 4). No statistical differences existed among them. At 60 GDD, a similar pattern held. Yields ranged from 61 to 66 bu/ac and protein stayed at 12-13% regardless of whether farmers applied all nitrogen preplant, all at 60 GDD, or split between the two. At 90 GDD, the single in-season application actually outperformed the split. While yields remained similar across all three methods (61-64 bu/ac), the in-season application delivered significantly higher protein at 13.7%, compared to 12.4% for preplant and 12.5% for split applications. This suggests that concentrating nitrogen at 90 GDD, rather than diluting it across two applications, allows more efficient incorporation into grain protein. The only timing where splits appeared beneficial was 120 GDD, where the split application yielded 59 bu/ac compared to 51 bu/ac for the single late application. But this is not a win for splitting. It simply demonstrates that applying all nitrogen at 120 GDD is too late, and that putting half down earlier salvages some of the yield loss. Across all timings tested, splitting nitrogen into two applications offered no agronomic advantage over a single well-timed application, meaning farmers are making an extra pass for no gain in yield or protein.

Practical Recommendations for Nitrogen Management

Based on three years of field data, farmers should target the 90 GDD timing (late tillering, Feekes 5-6) for their main nitrogen application to achieve the best balance between yield and protein. This window typically falls in late February to early March in Oklahoma, though farmers should monitor crop development rather than relying solely on the calendar: apply when wheat shows multiple tillers, good green color, and vigorous growth. A rate of 90 lbs N/ac maximized yield in these trials; higher rates only increased protein without adding bushels, so farmers should only exceed this rate if receiving premium payments for high-protein wheat. Splitting nitrogen applications provided no advantage at any timing, meaning a single well-timed application at 90 GDD is sufficient for most Oklahoma wheat production systems. The exception would be sandy soils with high leaching potential, where splitting may reduce nitrogen loss. Farmers should avoid delaying applications until 120 GDD or later, as this timing consistently resulted in 15-25 bushel per acre yield losses even though protein increased. For farmers specifically targeting premium protein markets, a two-step strategy works best: apply 90 lbs N/ac at 90 GDD to establish yield potential and baseline protein, then follow with a foliar application of 20-30 lbs N/ac at flowering to push protein above 14% without sacrificing yield. Finally, weather conditions matter: hot, dry forecasts increase volatilization risk and reduce uptake efficiency, so farmers should consider moving applications earlier if low humidity conditions are expected.

Split Application Caveat * Note from Arnall.

The caveat to “it only takes one pass” is high-yielding (85+ bpa) environments. In these situations I still have not found any value in a preplant nitrogen application. I have, however, seen that a split spring application is valuable: basically putting on 30-50 lbs at green-up, with the rest following at jointing (hollow stem). This method tends to reduce lodging in high-yielding environments.

This work was published in Front Plant Sci. 2025 Nov 6;16:1698494. doi: 10.3389/fpls.2025.1698494

Split nitrogen applications provide no benefit over a single well timed application in rainfed winter wheat

Another reason to N-Rich Strip.

Yet just one more data set showing the value of in-season nitrogen and why the N-Rich Strip concept works so well.

Questions or comments please feel free to reach out.

Brian Arnall b.arnall@okstate.edu

Acknowledgements:

Oklahoma Wheat Commission and Oklahoma Fertilizer Checkoff for Funding.

Using soil moisture trend values from moisture sensors for irrigation decisions

Sumit Sharma, Extension Specialist for High Plains Irrigation and Water Management

Kevin Wagner, Director, Oklahoma Water Resources Center

Sumon Datta, Irrigation Engineer, BAE.

Sensor-based and data-driven irrigation scheduling has gained interest in irrigated agriculture around the world, especially in semi-arid areas, because of the easy availability of commercial irrigation scheduling technology such as soil moisture sensors and crop models. Moisture sensing has particularly gained interest among the agriculture community because sensors are readily available to producers, affordable, and come with easy-to-use graphical user interfaces. The economic potential of sensors to save irrigation costs, data interpretation training through extension education programs, and policy initiatives have also helped with adoption of the sensors, especially in the United States. However, sensor adoption and efficient use can still be challenging due to poor data interpretation, steep learning curves, overly high expectations, and subscription costs. This blog briefly discusses scenarios where sensors can be helpful in irrigated agriculture. For moisture sensor types, functioning, and installation, readers are referred to OSU Extension fact sheet BAE-1543.

Irrigation Scheduling

Irrigation scheduling with soil moisture sensors follows the traditional principles of field capacity (FC), plant available water, maximum allowable depletion (MAD), and permanent wilting point (PWP). Figure 1 shows the transition of soil moisture level from field capacity to MAD, and to permanent wilting point in a typical soil. The maximum amount of water that a soil can hold after the excess moisture has drained is called field capacity. At this point, plant available water is at its maximum. As the moisture content in the soil declines, it becomes more difficult for the plants to extract moisture from the soil. The soil moisture level below which the available moisture in soil cannot meet the plant’s water requirement is called the MAD. The water stress that occurs once soil moisture drops below this level can cause yield reductions in crops. Therefore, irrigation should be triggered as soon as the soil moisture level approaches this point (MAD) to avoid any yield losses (for detailed information on MAD, its value for different soils and crops, and irrigation scheduling, readers are referred to BAE-1537). Modern soil moisture sensors can come self-calibrated and are equipped with water stress threshold levels for different crops to avoid water stress or overwatering (Figure 2). These decisions are useful in furrow and drip irrigation systems where irrigation triggers can be synchronized with MAD values.
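The relationship between field capacity, permanent wilting point, and MAD can be sketched as a short calculation. This is a minimal illustration, not a method taken from the fact sheets: it assumes MAD is expressed as a fraction of plant available water (FC minus PWP), and the soil values and function names below are hypothetical placeholders. Real FC, PWP, and MAD values for a given soil and crop should come from BAE-1537 or field measurements.

```python
def irrigation_trigger(fc, pwp, mad_fraction):
    """Soil water content at which irrigation should start.

    fc, pwp: volumetric water content at field capacity and at
             permanent wilting point (same units, e.g. in/in).
    mad_fraction: maximum allowable depletion as a fraction (0-1)
                  of plant available water (assumed convention).
    """
    plant_available_water = fc - pwp
    return fc - mad_fraction * plant_available_water


def needs_irrigation(current, fc, pwp, mad_fraction):
    """True when the current reading is at or below the trigger level."""
    return current <= irrigation_trigger(fc, pwp, mad_fraction)


# Hypothetical soil: FC = 0.30, PWP = 0.14, MAD = 50% of available water
trigger = irrigation_trigger(0.30, 0.14, 0.50)
print(f"Irrigate when soil water content falls to {trigger:.2f}")
print(needs_irrigation(0.21, 0.30, 0.14, 0.50))  # reading below the trigger
print(needs_irrigation(0.25, 0.30, 0.14, 0.50))  # reading above the trigger
```

Because sensor readings may not match true soil water content exactly, a trigger computed this way is best treated as a starting point to be adjusted against field observations.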

Figure 2: Screenshots of the graphical user interfaces of three sensors, a) GroGuru b) Sentek c) Aquaspy (top to bottom), with threshold levels for soil moisture conditions. Aquaspy and Sentek credits: Sumit Sharma. GroGuru image credits: groguru.com

Soil Moisture Trends and Irrigation Depths

Soil moisture sensors can help make data-informed decisions about scheduling irrigation. Previous studies have shown that the moisture values may vary from one sensor to the other and may not represent the exact moisture levels in soil. However, all soil moisture sensors exhibit trends in recharge and decline in soil moisture conditions. These real time soil moisture trends can be used to make informed decisions to adjust irrigation and improve water use efficiency. In high ET demand environments of Oklahoma, pivots are usually not turned off during the peak growing season, yet sensors can help in making decisions for early as well as late growing periods.

One of the easiest adjustments that can be made using soil moisture sensor data is the adjustment of irrigation depth. In an ideal situation, every irrigation event should recharge the soil profile to field capacity; however, this is often limited by the crop’s water demand and the well/irrigation capacity to replenish soil moisture levels. Each peak in soil moisture detected by sensors indicates irrigation or rain, which ideally should bring moisture to the same level after each irrigation. However, a reduction in moisture peaks in the soil moisture profile with every irrigation often indicates greater crop water demand than what is replenished with irrigation. In such scenarios, as allowed by capacity and infiltration rates, the irrigation depth can be increased. These trend values are particularly useful for center pivot irrigation systems, where triggering irrigation based on MAD might lag due to the time- and space-bound rotations of the pivots in Oklahoma weather conditions.
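The idea of watching for shrinking moisture peaks can be sketched in a few lines. This is only an illustration under simple assumptions: the readings are a hypothetical, evenly spaced series from one sensor depth, a "peak" is any reading higher than the one before it and at least as high as the one after it, and the function names are made up for this example. Commercial sensor platforms may identify irrigation events differently.

```python
def find_peaks(readings):
    """Return readings that are local maxima (recharge peaks)."""
    return [
        readings[i]
        for i in range(1, len(readings) - 1)
        if readings[i - 1] < readings[i] >= readings[i + 1]
    ]


def peaks_declining(readings, tolerance=0.0):
    """True if every recharge peak is lower than the one before it.

    tolerance: minimum drop (in sensor units) that counts as a decline.
    """
    peaks = find_peaks(readings)
    if len(peaks) < 2:
        return False  # not enough irrigation events to judge a trend
    return all(
        later < earlier - tolerance
        for earlier, later in zip(peaks, peaks[1:])
    )


# Hypothetical sensor readings (percent moisture) over three irrigations
peak_season = [30, 36, 33, 31, 35, 32, 30, 34, 31]
late_season = [30, 34, 31, 35, 32, 36, 33]

print(find_peaks(peak_season))       # peaks after each irrigation
print(peaks_declining(peak_season))  # demand outpacing irrigation
print(peaks_declining(late_season))  # each irrigation adds to the profile
```

A declining sequence of peaks suggests increasing the irrigation depth if capacity and infiltration allow it, while rising peaks late in the season suggest that an irrigation could be reduced or skipped.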

Figure 3: A screenshot from Aquaspy agspy moisture sensor showing moisture at 8” (blue) and 28” (red) with each irrigation event. Data and image credit: Sumit Sharma

Deciding on the last irrigation of the cropping season can be tricky. For summer crops, this is the time when crop ET demand is declining due to the decline in green biomass and cooler weather patterns. Similar moisture trends can be used to make decisions about the last irrigation events, which can be skipped or reduced if the profile moisture is good, or provided if profile moisture is low. This is important because, in an ideal situation, one would want to end the season with a relatively drier profile to capture and store off-season rains. Additionally, saving water on the last irrigation can save operational costs and potentially cover the cost of moisture sensor subscriptions.

These decisions can be illustrated with Figure 3, which shows the trends of decline and recharge in a soil profile under corn at the 8- and 28-inch depths. This field was irrigated with a center pivot system that applied 1-1.25 inches of water with each irrigation event; however, the peak moisture level reached at both depths declined with each irrigation. This coincided with the peak growth period, indicating that the crop’s ET demand was rising faster than irrigation could replenish it. Later, two rain events, in addition to irrigation, replenished soil moisture in both layers. As the pivot was already running at a slow speed, slowing it further was not an option without triggering runoff for this soil type and this well capacity. Later in the season, when the crop started to senesce and ET demand declined, each irrigation event added to the moisture level of the soil. This allowed the producer to shut down the pivot between the 70% starch line and physiological maturity, so the crop could finish on a relatively wet soil profile while leaving the soil relatively drier for the off-season.

In the high ET demand environments of western Oklahoma, irrigated crops often rely on moisture stored deep in the soil profile during the peak ET period, when well capacities can’t keep up with crop water demand. Declining soil profile moisture is therefore common during peak ET periods in high water demand crops such as corn. These observations are useful if one starts the season with considerable moisture in the soil profile; however, such trends may be absent if the season starts with a dry soil profile. Dry soil profiles can be recharged early in the season with pre-irrigation or deeper early irrigations (if allowed by the infiltration rate of the soil), when crop ET demand is low, to build the soil moisture profile. Conversely, if one starts with a full profile, sensors can be used to reduce irrigation depth or skip irrigations early in the cropping season. This usually encourages roots to grow through the profile to chase the moisture in deeper layers. It should be noted that roots will grow and chase moisture only through a wet profile, not through a dry one.

Sensor installation and calibration are important for efficient use of these devices in irrigation decision making. Poor installation can often lead to poor data and wrong decisions. Although modern sensors are self- or factory-calibrated, some do provide the option to adjust threshold levels manually based on field observations. Early installation of sensors can be useful in making informed decisions as soon as the season starts. For a more detailed analysis of proper sensor installation, refer to BAE-1543. Producers are encouraged to integrate other means of irrigation planning with soil moisture sensing, such as a push rod to probe the soil profile or OSU Mesonet’s irrigation planner, to further validate the sensor data. Further, producers should consider their irrigation capacities before investing in soil moisture sensors, as sensors may always show a deficit where well capacity is too low to meet the crop’s water demand.

References:

Taghvaeian, S., D. Porter, J. Aguilar. 20221. Soil moisture-sensing systems for improving irrigation scheduling. BAE-1543. Oklahoma State Cooperative Extension. Available at: https://extension.okstate.edu/fact-sheets/soil-moisture-sensing-systems-for-improving-irrigation-scheduling.html

Datta, S., S. Taghvaeian, J. Stivers. Understanding soil water content and thresholds for irrigation management. BAE-1537. Oklahoma State Cooperative Extension. Available at: https://extension.okstate.edu/fact-sheets/understanding-soil-water-content-and-thresholds-for-irrigation-management.html

For more information please contact Sumit Sharma sumit.sharma@okstate.edu

Toto, I’ve a feeling we’re not in Kansas anymore. Double Cropping, Orange edition

It has been pointed out that the blog https://osunpk.com/2025/06/09/double-crop-options-after-wheat-ksu-edition/ had a significant Purple Haze. And I should have added the Oklahoma caveat. So Dr. Lofton has provided his take on DC corn in Oklahoma.

Double-crop Corn: An Oklahoma Perspective.

Dr. Josh Lofton, Cropping Systems Specialist.

Several weeks ago, a blog was published discussing double-crop options with a specific focus on Kansas. I wanted to address one part of that blog with a greater focus on Oklahoma, and that section would be the viability of double-crop corn as an option.

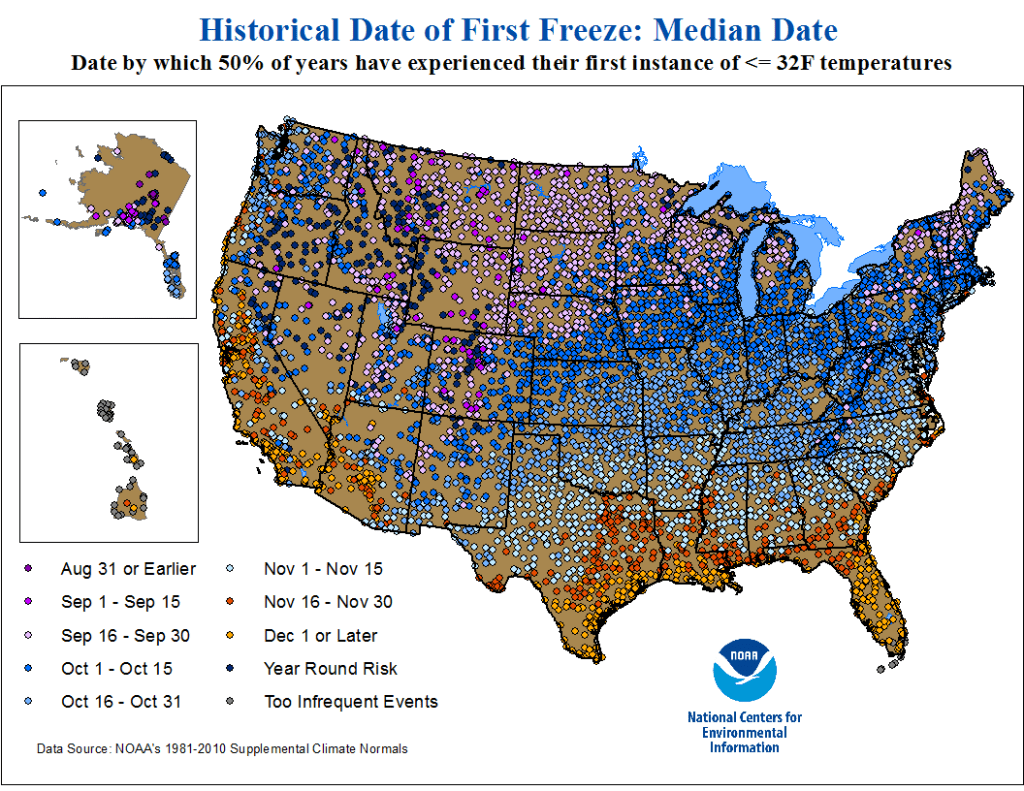

Double-crop farming is considered a high-risk, high-reward system. Establishing a crop during the hottest and often driest parts of summer can present challenges that need to be overcome. Double-crop corn faces these same challenges and, in some seasons, even more. However, it is definitely a system that can work in Oklahoma, especially farther south. If you look at that original blog post, one of the main challenges discussed is having enough heat units before the first frost. When examining historic data, like those below from NOAA, the first potential frost date for Northcentral and Northwest Oklahoma may be as early as the first 15 days of October but more often will be in the last 15 days of October. In Southwest and Central Oklahoma, this date shifts even later to the first 15 days of November. This is later than Kansas, especially northern Kansas, which has a much higher chance of experiencing an early October freeze. I do not want to downplay this risk; it is one of the biggest risks growers face with this system, and a later fall freeze greatly benefits it. We have been conducting trials near Stillwater for the past five years on double-crop corn and have only lost the crop once due to an early freeze event. But in that year, both double-crop soybean and sorghum also did not perform well.

The main advantage of double-crop corn is that if you miss the early season window, it offers the best chance for the crop to reach pollination and early grain fill without the stress of the hottest and driest part of the year. Therefore, careful management is crucial to ensure this benefit isn’t lost. In Oklahoma, we have two systems that can support double-crop corn. In more central and southwest Oklahoma, especially under irrigation, farmers can plant corn soon after wheat harvest, similar to other double-crop systems. This planting window helps minimize the impact of Southern Rust, which can significantly reduce yields in some years, and may reduce the need for extensive management. This earlier planting window is often supported by irrigation, enabling the crop to endure the hotter, drier late July and early August periods. Conversely, in northern Oklahoma, planting often occurs in July to allow pollination and grain fill (usually 30-45 days after emergence) to happen in late August and early September. During this period, the chances of rainfall and cooler nighttime temperatures increase, both of which are critical for successful corn production.

Other management considerations include maturity. Based on initial testing in Oklahoma, particularly in the northern areas, we prefer to plant longer-maturity corn. Early corn varieties have a better chance of maturing before a potential early freeze but also carry a higher risk of undergoing critical reproduction stages (pollination and early grain fill) during hot, dry periods in late summer. Testing indicates that corn with a maturity of over 110 days often works well for this. However, this does not mean growers cannot plant shorter-season corn, especially if the season has generally been cooler, though the risk still exists depending on how quickly the crop can grow. Based on testing within the state, the dryland double-crop corn system typically does not require adjustments to other management practices, such as seeding rates or nitrogen application. Because of the need to coordinate leaf architecture and manage limited water resources, higher seeding rates are not recommended. Maintaining current nitrogen levels allows the crop to develop a full canopy.

The final question is often: how does it yield? The answer varies greatly. Corn looks very good this year across the state, especially what was planted earlier in the spring. However, in recent years, delaying even a couple of weeks beyond traditional planting windows has lowered yields enough that double-crop yields are often similar. We have often harvested between 50 and 120 bushels per acre in our plots around Stillwater with double-crop systems. So, the yield potential is still there.

In the end, Oklahoma growers know that double-crop is a risk regardless of the crop chosen. There are additional risks for double-crop corn, such as Southern Rust in the south and freeze dates in the north. This risk is increased by the presence of Corn Leaf Aphid and Corn Stunt last season, and it is not clear if these will be ongoing problems. Therefore, growers need to be careful not to expect too much or to invest too heavily in inputs that may not be recoverable if there is a loss. One silver lining is that if double-crop corn doesn’t succeed in any given year, growers can still use it as forage and recover at least some of their costs.

Any questions or concerns reach out to Dr. Lofton: josh.lofton@okstate.edu

Army Worms are Marching!!!!

This article by Brian Pugh (new OSU State Forage Specialist) just came across my desk today in perfect timing, as yesterday I saw significant army worm feeding on the crabgrass in my lawn, not to mention the 20+ caterpillars on my sidewalk. So while Brian is noting Eastern OK, I’d say we are at thresholds in Payne Co also. And no, we don’t need to discuss that my lawn has more crabgrass than Bermuda.

Fall Armyworms Have Arrived In Oklahoma Pastures and Hayfields

Brian C. Pugh, Forage Extension Specialist

Fall armyworms (FAW) are caterpillars that directly damage Bermudagrass and other introduced forage pastures, seedling wheat, soybean and residential lawns. There have been widespread reports of FAW buildups across East Central and Northeast Oklahoma in the first two weeks of July. Current locations exceeding thresholds for control are Pittsburg, McIntosh and Rogers counties.

Female FAW moths lay up to 1000 eggs over several nights on grasses or other plants. Within a few days, the eggs hatch and the caterpillars begin feeding. Caterpillars molt six times before becoming mature, increasing in size after each molt; the stages between molts are called instars. The first instar is the caterpillar just after it hatches. A second instar is the caterpillar after it has shed its skin for the first time. A sixth instar has shed its skin five times and will feed, bury itself in the soil, and pupate. The adult moth will emerge from the pupa in two weeks and begin the egg laying process again after a suitable host plant is found. Newly hatched larvae are white, yellow, or light green and darken as they mature. Mature FAW measure 1½ inches long with a body color that ranges from green to brown to black.

Large variation in color is normal and shouldn’t be used alone as an identifying characteristic. They can most accurately be distinguished by the presence of a prominent inverted white “y” on their head. However, infestations need to be detected long before they become large caterpillars. Small larvae do not eat through the leaf tissue, but instead scrape off all the green tissue and leave a clear membrane that gives the leaf a “window pane” appearance. Larger larvae, however, feed voraciously and can completely consume leaf tissue.

FAW are “selective grazers” and tend to select the most palatable species of forages on any given site to lay eggs for young larvae to begin feeding. The caterpillars also tend to feed on the upper parts of the plant first which are younger and lower in fiber content. Forage stands that are lush due to fertility applications are often attacked first and should be scouted more frequently.

To scout for FAW, plants from several locations within the field or pasture need to be examined. Examine plants along the field margin as well as in the interior. Look for “window paned” leaves and count all sizes of larvae. OSU suggests a treatment threshold of two or three ½ inch-long larvae per linear foot in wheat and three or four ½ inch-long larvae per square foot in pasture. An easy-to-use scouting aid can be made for pasture by bending a wire coat hanger into a hoop and counting FAW in the hoop. The hoop covers about 2/3 of a square foot, so a threshold in pasture would be an average of two or three ½ inch-long larvae per hoop sample. An excellent indicator plant in forage stands is Broadleaf Signalgrass (seen in the foreground of the hay bale picture). Broadleaf Signalgrass tends to be preferentially selected by female moths and is one of the first species on which window-paned tissue is observed during the onset of an infestation.
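The hoop arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function names and the sample counts are hypothetical, the 2/3 square foot hoop area and the pasture threshold of three larvae per square foot come from the text, and your County Extension Educator should still confirm any treatment decision.

```python
# Hoop area stated in the article: a bent coat hanger covers about 2/3 sq ft.
HOOP_AREA_SQFT = 2 / 3

def larvae_per_sqft(hoop_counts):
    """Average larvae per square foot from a list of per-hoop counts."""
    mean_per_hoop = sum(hoop_counts) / len(hoop_counts)
    return mean_per_hoop / HOOP_AREA_SQFT

def exceeds_pasture_threshold(hoop_counts, threshold_per_sqft=3.0):
    """True when the field average meets the lower pasture threshold (3 per sq ft)."""
    return larvae_per_sqft(hoop_counts) >= threshold_per_sqft

# Hypothetical counts of 1/2 inch larvae from six hoop tosses across a pasture.
counts = [1, 3, 2, 4, 2, 3]
print(round(larvae_per_sqft(counts), 2), exceeds_pasture_threshold(counts))
```

Averaging over several hoop tosses, from both the margin and the interior, keeps one hot spot from driving the decision.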

Approximately 70% of the forage an armyworm consumes during its lifetime is eaten in the final instar before it pupates and becomes a moth. This indicates that control measures should focus on small instar caterpillars (1/2 inch or less) before forage loss increases exponentially. Additionally, small larvae are much more susceptible to insecticide control than larger caterpillars.

Remember, FAW are actively reproducing up until a good killing frost, so don’t let your guard down. If you think you have an infestation of fall armyworm please contact your local County Extension Educator. Additionally, before considering chemical control consult your Educator for insecticide recommendations labeled for forage use.

For more information or insecticide options consult:

Oklahoma State University factsheet:

CR-7193, Management of Insect Pests in Rangeland and Pasture

https://extension.okstate.edu/fact-sheets/management-of-insect-pests-in-rangeland-and-pasture.html

Meet the Aster Leafhopper and Learn How to Distinguish it from the Corn Leafhopper

Ashleigh M. Faris: Extension Cropping Systems Entomologist, IPM Coordinator

Release Date June 3 2025

Last year’s corn stunt disease outbreak, caused by the corn leafhopper transmitting pathogens associated with corn stunt disease, has been on everyone’s minds. Over the past few weeks, I’ve received several calls from growers, crop consultants, and industry partners concerned about leafhoppers in corn. Fortunately, none have been corn leafhoppers; the vast majority have instead been aster leafhoppers. So far, no corn leafhoppers have been reported north of central Texas. Oklahoma did not have any reports of overwintering corn leafhoppers, so if we have the insect this year it will need to migrate northward from where it currently resides. For a refresher on the corn leafhopper and corn stunt disease, check out these two previously posted OSU Pest e-Alerts: EPP-25-3 and EPP-23-17.

Leafhoppers in general are insects that we have had for many years in our row and field crops. But we likely did not pay attention to them or notice them until this past year due to our heightened awareness of their existence thanks to the corn leafhopper and corn stunt disease. Below is guidance on how to distinguish between the corn leafhopper and aster leafhopper. Remember, if the corn leafhopper is detected in the state, OSU Extension will notify growers, consultants, and industry partners through Pest e-Alerts and our social media channels.

Aster Leafhopper Overview

The aster leafhopper (aka six spotted leafhopper), Macrosteles quadrilineatus, is native to North America and can be found in every U.S. state, as well as Canada. This polyphagous insect feeds on over 300 host plant species including weeds, vegetables, and cereals. Like many other leafhoppers, the aster leafhopper can be a vector of pathogens that cause disease, but corn stunt is not one of them. Instead, aster leafhoppers cause problems in traditional vegetable growing operations, as well as floral production. There is currently no concern for this insect being a vector of disease in row or field crops, including corn. Check out the OSU Pest e-Alert EPP-23-1 to learn more about this insect and aster yellows disease.

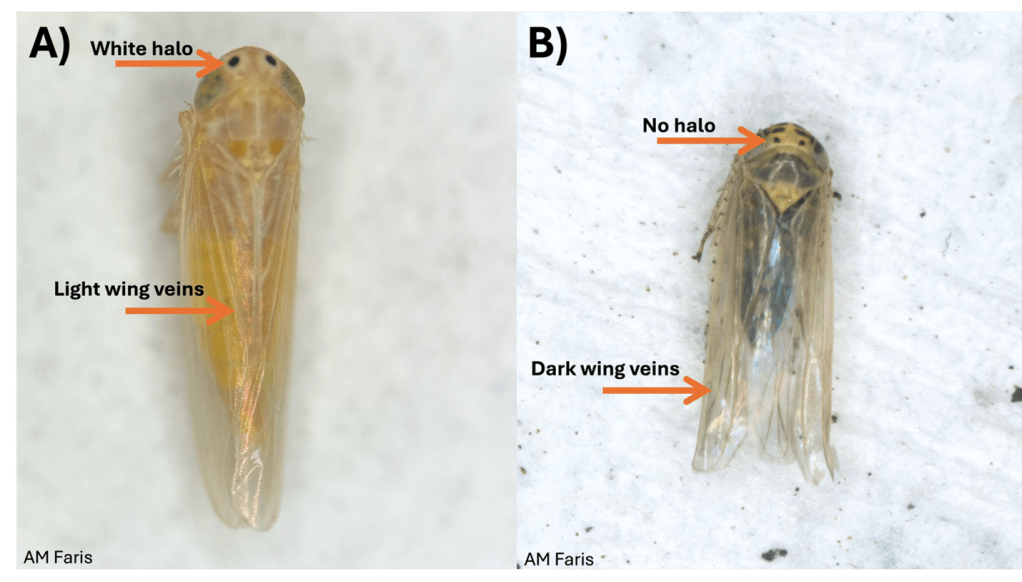

Aster Leafhopper versus Corn Leafhopper

The corn leafhopper (Photo 1A) and the aster leafhopper, as well as many other leafhopper species, have two black dots located between the eyes of the insect (Photo 1). Aster leafhopper adults are 0.125 inches (3 mm) long, with transparent wings that bear strong veins, and darkly colored abdomens (Photo 1B). Their dark abdomen can cause the aster leafhopper to appear grey when you see them in the field. Their long wings can also make the insect look similar to the corn leafhopper (Dalbulus maidis) (Photo 1).

Characteristics that differentiate the corn leafhopper from the aster leafhopper are as follows. When viewed from above (dorsally): 1) the corn leafhopper’s dots between the eyes have a white halo around them, whereas the aster leafhopper’s dots between the eyes lack the white halo, and 2) the corn leafhopper has lighter/finer wing venation than the aster leafhopper (Photo 1). When viewed from their underside (ventrally): 3) the corn leafhopper lacks markings on its face, whereas the aster leafhopper has lines/spots on the face, and 4) the abdomen of the corn leafhopper lacks the dark coloration of the aster leafhopper (Photo 2).

Confirming Corn Leafhopper Identification

It is important to note that many insects will have their cuticle darken as they age. This, along with there being light and dark morphs of many insects, can lead to additional confusion when distinguishing one species from another. If you believe that you have a corn leafhopper, then you need to collect the insect and send it to a trained entomologist who can verify the identity of the insect under the microscope. Leafhoppers in general are fast-moving insects, but they can be collected in an insect net or using a handheld vacuum (see EPP-25-3). You can submit samples to the OSU Plant Disease and Insect Diagnostic Lab.

Please feel free to reach out to OSU Cropping Systems Extension Entomologist Dr. Ashleigh Faris with any questions or concerns at ashleigh.faris@okstate.edu

PRE-EMERGENT RESIDUAL HERBICIDE ACTIVITY ON SOYBEANS, 2025

Liberty Galvin, Weed Science Specialist

Karina Beneton, Weed Science Graduate Student.

Objective

Determine the duration of residual weed control in soybean systems following the application of Preemergent (PRE) herbicides when applied alone and in tank-mix combination.

Why we are doing the research

PRE herbicides offer an effective means of suppressing early-season weed emergence, thereby minimizing competition during the critical early growth stage. However, evolving herbicide resistance and the need for longer-lasting weed suppression underscore the importance of evaluating multiple modes of action and their residual properties alone and tank-mixed.

Field application experimental design and methods

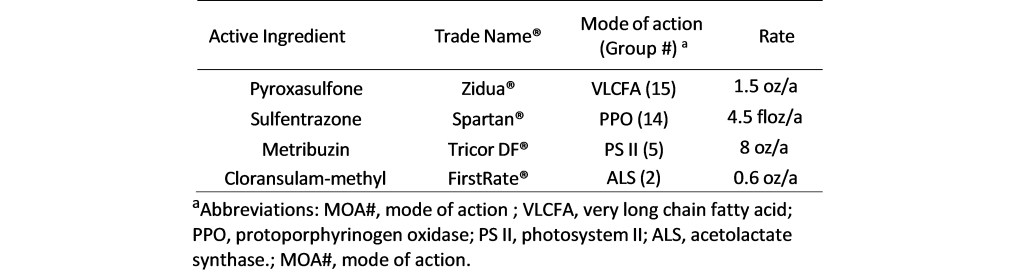

Field experiments were conducted in 2022, 2023, and 2024 growing seasons in Bixby, Lane, and Ft. Cobb FRSU Research Stations across Oklahoma. Each herbicide (listed in Table 1) was tested individually, in 2-way combinations, 3-way mixtures, and finally as 4-way combinations that included all active ingredients listed at the label rate.

Soybeans were planted at rates between 116,000 and 139,000 seeds/acre from late May to early June, depending on the year and location. The variety used belongs to the indeterminate mid-maturity group IV, with traits conferring tolerance to glyphosate (group 9 mode of action), glufosinate (group 10), and dicamba (group 4). Not all soybean varieties have metribuzin tolerance. Please read the herbicide label and consult your seed dealer for acquiring tolerant varieties. Row spacing was 76 cm at Bixby and Lane, and 91 cm at Fort Cobb. PRE treatments were applied immediately after planting at each experimental location.

POST applications consisted of a tank-mix of dicamba (XtendiMax VG® – 22 floz/acre), glyphosate (Roundup PowerMax 3®- 30 floz/acre), S-metolachlor (Dual II Magnum® – 16 floz/acre), and potassium carbonate (Sentris® – 18 floz/acre). Applications were made on different dates, mostly after the first 3 weeks following PRE treatments. These timings were based on visual weed control ratings, particularly for herbicides applied alone or in 2-way combinations, which showed less than 80% control at those early evaluation dates. The need for POST applications also depended on the species present at each site, with most fields being dominated by pigweed, as illustrated in the figure below.

Results

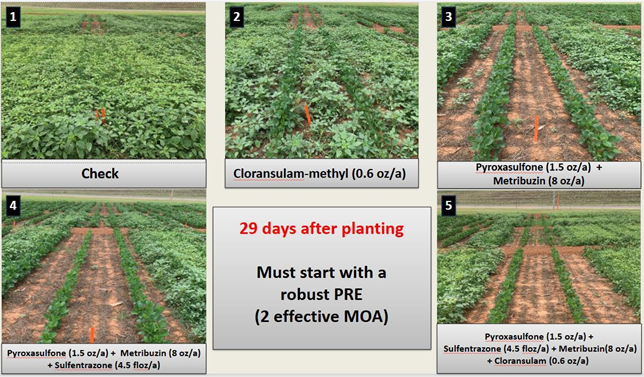

Tank-mixed PRE herbicide combinations generally provided superior residual control compared to a single mode of action application (Shown in Figure 1). Timely post-emergent (POST) herbicide applications helped sustain high levels of weed suppression, particularly as the effectiveness of residual PRE declined.

Residual control of tank-mixed PRE

Some herbicides applied alone or in simple 2-way mixes, such as sulfentrazone + cloransulam-methyl and pyroxasulfone + cloransulam-methyl, required POST applications within 20 to 29 days after PRE, indicating moderate residual control.

In contrast, 2-way combinations containing metribuzin, such as sulfentrazone + metribuzin and pyroxasulfone + metribuzin, extended control up to 50 days after PRE in some cases, highlighting metribuzin’s importance even in less complex formulations.

Furthermore, 3-way and 4-way combinations including metribuzin provided the longest-lasting control, delaying POST applications up to 51–55 days after PRE.

Injury of specific weeds

Palmer amaranth (Amaranthus palmeri) control in Bixby was consistently high (≥90%) at 2 weeks after PRE (WAPRE) in 2022 and 2024 across all treatments. At 4 WAPRE, treatments containing metribuzin alone or in combination maintained strong control (90% or greater).

Texas millet (i.e., panicum; Urochloa texana) and large crabgrass (Digitaria sanguinalis) were effectively managed with most treatments delivering over 90% control early in the season and maintaining performance throughout. In 2024, control remained generally effective, though pyroxasulfone alone showed a temporary lack of control for Texas millet, and single applications declined in effectiveness against large crabgrass later in the season. These reductions were likely due to continuous emergence and the natural decline in residual herbicide activity due to weather. The most consistent late-season control for both species came from 3- and 4-way herbicide combinations.

Morningglory (Ipomoea purpurea) control reached full effectiveness (100%) only when POST herbicides were applied, across all years and locations. Their late emergence beyond the residual window of PRE herbicides reinforces the importance of sequential herbicide applications for season-long control.

Take home messages:

- Incorporating both PRE and POST herbicides slows the rate of herbicide resistance.

- Tank mixing with *different modes of action* ensures greater weed control by having activity on multiple metabolic pathways within the plant.

- Tank mixing PRE herbicides could reduce the number of POST applications required.

- The residual activity of a PRE application provides flexibility in POST application timing.

For additional information, please contact Liberty Galvin at 405-334-7676 | LBGALVIN@OKSTATE.EDU or your Area Agronomist extension specialist.

Laboratory evaluation of Liquid Calcium

Liquid calcium products have been around for a long time. The vast majority of these products have either a calcium chloride or chelated calcium base, which is now commonly sold with the addition of a humic acid, microbial, or micronutrient. Many of these make promises such as “raises your soil pH with natural, regenerative, liquid calcium fertilizers that correct soil pH quickly, efficiently, and affordably!”. From a soil chemistry standpoint, the promise that adding 3 to 5 gallons of a Ca solution, which is approximately 10% Ca, will raise the soil pH is impossible on a mass balance basis. By this I mean that increasing the pH of an acid soil {soil pH is the ratio of hydrogen (H) and hydroxide (OH) in the soil, and having an acid soil means the concentration of H is greater than that of OH} requires a significant portion of the H+ that is in solution and on soil particles to be converted to OH, or removed from the system entirely.

The blog below walks through the full chemical process of liming a soil, but in essence, to reduce the H+ concentration we add a cation (positively charged ion) such as Ca or magnesium (Mg), which will kick the H+ off the soil particle, and an oxygen (O) donor such as the CO3 in ag lime or the (OH)2 in hydrated lime. These O’s react with the H’s to make water. And with that the pH increases.

However, regardless of the chemistry, there is always a lot of discussion around the use of liquid calcium. Therefore we decided to dig into the question with both field and laboratory testing. This blog will walk through the lab portion.
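For readers who want the chemistry written out, the textbook neutralization reactions for calcium carbonate and calcium hydroxide are sketched below. These are standard general-chemistry equations added for illustration, not reactions reproduced from the linked blog. Note that calcium chloride supplies Ca but no carbonate or hydroxide to consume H+.

```latex
\begin{align*}
\mathrm{CaCO_3} + 2\,\mathrm{H^+} &\rightarrow \mathrm{Ca^{2+}} + \mathrm{H_2O} + \mathrm{CO_2} \\
\mathrm{Ca(OH)_2} + 2\,\mathrm{H^+} &\rightarrow \mathrm{Ca^{2+}} + 2\,\mathrm{H_2O}
\end{align*}
```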

This was a laboratory incubation study. The objective was to evaluate the impact of the liquid Ca product (LiqCal**) on the soil pH, buffer capacity, Ca content, and CEC of two acidic soils. LiqCal was applied at three rates to 500 g of soil. The three rates were equivalent to 2, 4, and 6 gallons per acre applied on a 6” acre furrow slice of soil. One nontreated check and two comparative products were also included. HydrateLime (CaO) was applied at a rate of Ca equivalent to the amount of Ca applied via LiqCal, which was approximately 1.19 pounds of Ca per acre. AgLime (CaCO3) was also applied at rates equivalent to 1, 2, and 4 ton effective calcium carbonate equivalency (ECCE). The Ag lime used in the study had a measured ECCE of 92%. The two soils selected were both acidic but had differing soil textures and buffering capacities. The first, LCB, had an initial soil pH (1:1 H2O) of 5.3 and a silty clay loam texture, and Perkins had an initial pH of 5.8 and a sandy loam texture. Both soils had been previously collected, dried, ground, and homogenized. In total, 10 treatments were tested across two soils with four replications per treatment and soil.

Project protocol, which has been used to determine site-specific liming and acidification rates, was to apply the treatments to 500 grams of soil. Then, for a period of eight weeks, this soil was wetted and mixed to a point of 50% field capacity once a week, then allowed to air-dry and be mixed again. At the initiation and every two weeks after, soil pH was recorded from each treatment. The expectation is that soil pH levels will change as the liming products are impacting the system and at some point the pH reaches equilibrium and no longer changes. In this soil that point was week six; however, the trial was continued to week eight for confirmation. See Figures 1 and 2.
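To give a feel for how small a per-acre rate becomes in a 500 g sample, here is a rough scaling sketch. The post does not say how the lab rates were scaled, so the 2,000,000 lb mass of a 6-inch acre furrow slice is an assumption (a common rule of thumb), and the function name is hypothetical; the 92% ECCE and the 1, 2, and 4 ton rates are from the text.

```python
# Assumption: a 6-inch acre furrow slice weighs about 2,000,000 lb (rule of thumb).
ACRE_FURROW_SLICE_LB = 2_000_000
LB_PER_TON = 2000

def lime_per_sample_g(rate_ton_ecce_per_acre, ecce_fraction, soil_g=500):
    """Grams of lime product for a soil sample at a given ECCE rate per acre."""
    # Product needed per acre to deliver the target ECCE tonnage.
    product_lb_per_acre = rate_ton_ecce_per_acre * LB_PER_TON / ecce_fraction
    # The lb-per-lb ratio for the acre equals the g-per-g ratio for the sample.
    return product_lb_per_acre / ACRE_FURROW_SLICE_LB * soil_g

for rate in (1, 2, 4):  # ton ECCE per acre, as in the study
    print(rate, round(lime_per_sample_g(rate, 0.92), 3))
```

Under these assumptions, even the 4 ton rate works out to only a couple of grams of Ag lime per 500 g of soil.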

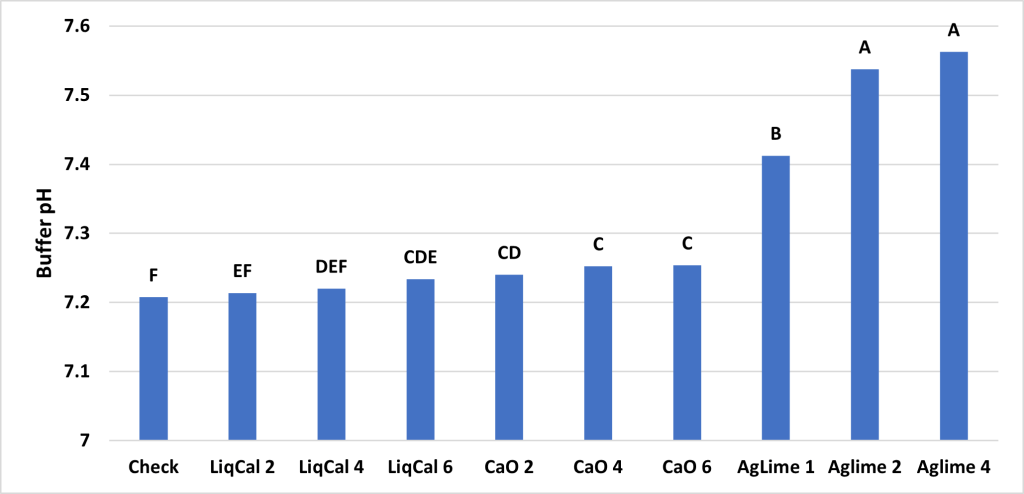

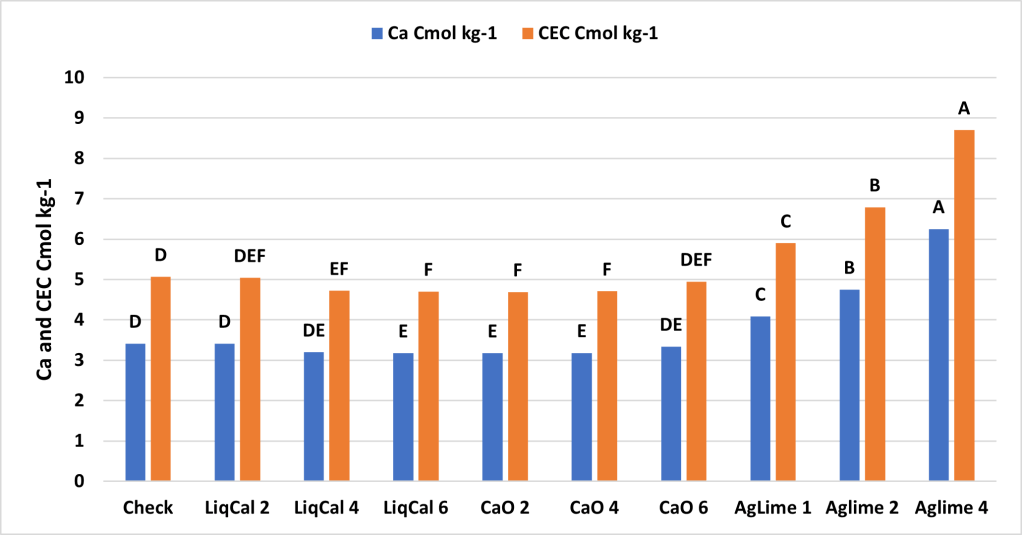

ANOVA main effect analysis showed that Soil was not a significant effect, so both soils were combined for further analysis. Figure 3 shows the final soil pH of the treatments, with letters above bars representing significance between treatments. In this study all treatments were significantly greater than the check with the exception of LiqCal 2 and CaO 6. Neither LiqCal nor CaO treatments reached the pH level of Aglime, regardless of rate.

Summary

The incubation study showed that application of LiqCal at rates of 4 and 6 gallons per acre did significantly increase the soil pH by 0.1 pH units, and 6 gallons per acre increased the buffer index above the check by 0.03 units, showing that the application of LiqCal did impact the soil. However, the application of 1 ton of Ag lime resulted in a significantly greater increase in soil pH, 1.0 units by 8 weeks, and a buffer index change of 0.2 units. Ag lime 1 was statistically greater than all LiqCal treatments. Ag lime 2 and 4 were both statistically greater than Ag lime 1, with pH increasing with increasing lime rate. Given that the active ingredient listed in LiqCal is CaCl2, this result is not unexpected. Ag lime changes pH through CO3 reacting with H+ in large quantities. In an unsupported effort, a titration was performed on LiqCal, which showed the solution was buffered against pH change. However, it was estimated that an application of approximately 500 gallons per acre would be needed to sufficiently change the soil pH within a 0-6” zone of soil.

Results of the field study.

https://osunpk.com/2025/06/02/field-evaluation-of-lime-and-calcium-sources-impact-on-acidity/

Take Home

The application of a liquid calcium will add both calcium and chloride, which are plant essential nutrients and can be deficient. In a soil or environment suffering from Cl deficiency specifically, I would expect an agronomic response. However, this study suggests there is no benefit to soil acidity or CEC with the application rates utilized (2, 4, and 6 gallons per acre).

** LiqCal The product evaluated was derived from calcium chloride. It should be noted that since the completion of the study this specific product has changed its formulation to a calcium chelate. This change, however, would not be expected to change the results, as the experiment did include an equivalent calcium rate of calcium oxide.

Other articles of Interest

https://extension.psu.edu/beware-of-liquid-calcium-products

https://foragefax.tamu.edu/liquid-calcium-a-substitute-for-what/

Any questions or comments feel free to contact me. b.arnall@okstate.edu

Field evaluation of lime and calcium sources impact on Acidity.

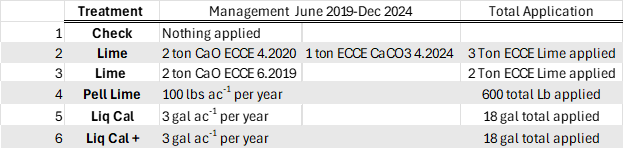

At the same time we initiated a lab study looking at the application of LiqCal https://osunpk.com/?p=2096 , we also initiated a field trial to look at the multi-year application of LiqCal, Pelletized Lime and Ag-Lime.

A field study was implemented on a bermudagrass hay meadow near Stillwater in the summer of 2019. The study looked to evaluate the impact of multiple liming / calcium sources on forage yield and soil properties. This report will focus on the impact of treatments on soil properties while a later report will discuss the forage results.

Table 1 lists the management of the six treatments we evaluated. All plots had 30 gallons of 28-0-0 streamed on each spring in May. Treatment 1 was the untreated check. Treatment 2 was meant to be a 2 ton ECCE (Effective Calcium Carbonate Equivalency) Ag Lime application when we first implemented the plots in 2019, but we could not source any in time, so we applied 2.0 ton ECCE hydrated lime (CaO) the next spring. The spring 2023 soil samples showed the pH to have fallen below 5.8, so Ag lime was sourced from a local quarry and 1.0 ton ECCE was applied May 2024. Treatment 3 was meant to complement Treatment 2 as an additional lime source of hydrated lime; it was applied June 2019. My project has used hydrated lime as a source for many years as it is fast acting and works great for research. Treatment 4 had 100 lbs. of pelletized lime applied each spring. The 100 lbs. rate was based upon a recommendation from a local group that sells Pell lime. Treatments 5 and 6 were two liquid calcium products, LiqCal* and LiqCal+**, from the same company. The difference, based upon information shared by the company, was the addition of humic acid in the LiqCal+ product. Both LiqCal and LiqCal+ were applied at a rate of 3 gallons per acre per year, with 17 gallons per acre of water as a carrier. Table 1 also shows total application over the six years of the study.

After six years of applications and harvest it was decided to terminate the study. The forage results were intriguing; however, few differences were seen in total harvest over the six years, highlighting a scenario I have encountered in the past on older stands of bermuda. That data will be shared in a separate blog.

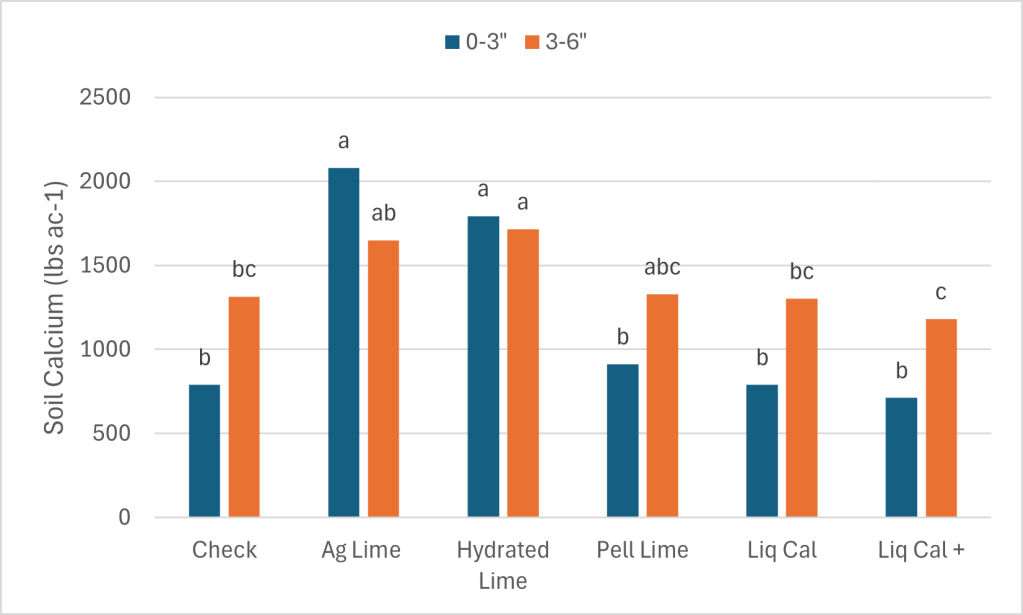

The soils data however showed exceptionally consistent results.

In February of 2025 soil samples were collected from each plot at depths of 0-3 inches and 3-6 inches (Table 2). We were interested in whether the soil was being impacted below the zone in which we would expect lime and calcium to move without tillage, which is 0-3″. Figure 1 below shows the soil pH of the treatments at each depth. In the surface (blue), the Ag Lime and Hydrated lime treatments both significantly increased pH, from 4.78 to 6.13 and 5.7 respectively, while the Pel lime, LiqCal, and LiqCal+ had pH’s statistically similar to the check at 4.8, 4.65, and 4.65. It is important to note that the Ag Lime applied in May of 2024 resulted in a significant increase in pH over the 2019 application of Treatment 3. The Spring of 2024 soil samples showed that the two treatments (2 and 3) were equivalent. So within one year of application the Ag lime significantly raised soil pH.

As expected, the impact on the 3-6″ soil pH was less than at the surface. However, the Ag Lime and Hydrated lime treatments significantly increased the pH by approximately 0.50 pH units. This is important data, as the majority of the literature suggests limited impact of lime on the soil below the 3″ depth.
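Because pH is a base-10 logarithmic scale (pH = -log10 of H+ activity), the surface pH shifts reported above represent large changes in acidity. The short sketch below converts a pH change into a fold change in H+ activity. The function name is hypothetical, and treating 4.78 as the baseline is an assumption based on how the values are quoted in the text.

```python
def h_ion_fold_decrease(ph_before, ph_after):
    """Factor by which H+ activity drops when pH rises from ph_before to ph_after."""
    # Each whole pH unit is a tenfold change in H+ activity.
    return 10 ** (ph_after - ph_before)

# Surface (0-3 inch) values quoted in the text, with 4.78 taken as the baseline.
print(round(h_ion_fold_decrease(4.78, 6.13), 1))  # Ag Lime treatment
print(round(h_ion_fold_decrease(4.78, 5.70), 1))  # Hydrated lime treatment
```

On this scale, the Ag Lime plots have roughly one twentieth of the H+ activity of the baseline, while the hydrated lime plots have roughly one eighth.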

The buffer pH of a soil is used to determine the amount of lime needed to change the soil’s pH. In Figure 2, while numeric differences can be seen, no treatment statistically impacted the buffer pH at any soil depth.

The soil calcium level was also measured. As with 0-3″ pH and buffer pH, the Ag Lime and Hydrated lime had the greatest change from the check. These treatments were not statistically greater than the Pell Lime but were higher than the LiqCal and LiqCal+.

Each value is the average of four replicates.

Take Homes

In terms of changing the soil’s pH or calcium concentration, as explained in the blog https://osunpk.com/2023/01/24/mechanics-of-soil-fertility-the-hows-and-whys-of-the-things/, it takes a significant addition of cations and oxygens to have an impact. This data shows that after six years of continued application of pelletized lime and two liquid calcium products the soil pH did not change, while the application of 2 ton ECCE hydrated lime did change it.

Also, within one year of application, Ag lime significantly increased the soil pH.

* LiqCal The product evaluated was derived from calcium chloride. It should be noted that since the completion of the study this specific product has changed its formulation to a calcium chelate. This change, however, would not be expected to change the results, as the experiment did include an equivalent calcium rate of calcium oxide.

** LiqCal+ The product evaluated was derived from calcium chloride. It should be noted that since the completion of the study this specific product has changed its formulation. The base was changed from calcium chloride to a calcium chelate. Neither existing label showed humic acid as an additive; however, the new label has a list of nutrients at or below 0.02% (Mg, Zn, S, Mn, Cu, B, Fe) and Na at 0.032% and is advertised as having microbial enhancements.

Any questions or comments feel free to contact me. b.arnall@okstate.edu

Sorghum Nitrogen Timing

Contributors:

Josh Lofton, Cropping Systems Specialist

Brian Arnall, Precision Nutrient Specialist

This blog will bring in three recent sorghum projects which tie directly into past work highlighted in the blogs https://osunpk.com/2022/04/07/can-grain-sorghum-wait-on-nitrogen-one-more-year-of-data/ and https://osunpk.com/2022/04/08/in-season-n-application-methods-for-sorghum/

Sorghum N management can be challenging. This is especially true as growers evaluate the input cost and associated return on investment expected for every input. Recent work at Oklahoma State University has highlighted that N applications in grain sorghum can be delayed by up to 30 days following emergence without significant yield declines. While this information is highly valuable, trials can only be run under certain environmental conditions. Changes in these conditions could alter the results enough to change the effect delayed N could have on the crop. Therefore, evaluating the physiological and phenotypic response to these delayed applications, especially under varied agronomic management, is warranted.

One of the biggest agronomic management decisions sorghum growers face yearly is planting rate. Growers typically increase the seeding rate in systems where specific resources, especially water, will not limit yield. At the same time, dryland growers across Oklahoma often decrease seeding rates by a large margin if adverse conditions are expected. If seeding rates are lowered in these conditions and resources are plentiful, sorghum often will develop tillers to overcome lower populations. However, if N is delayed, there is a potential that not enough resources will be available to develop these tillers, which could decrease yields.

A recent set of trials, summarized below, shows that as N is delayed, the number of tillers significantly decreases over time. Furthermore, the plant cannot overcompensate for the lower number of productive heads with significantly greater head size or grain weight.

This information shows that delaying sorghum N applications can still be a viable strategy as growers evaluate their crop’s potential and possible returns. However, delayed N applications will often result in a lower number of tillers without compensating with increased primary head size or grain weight.

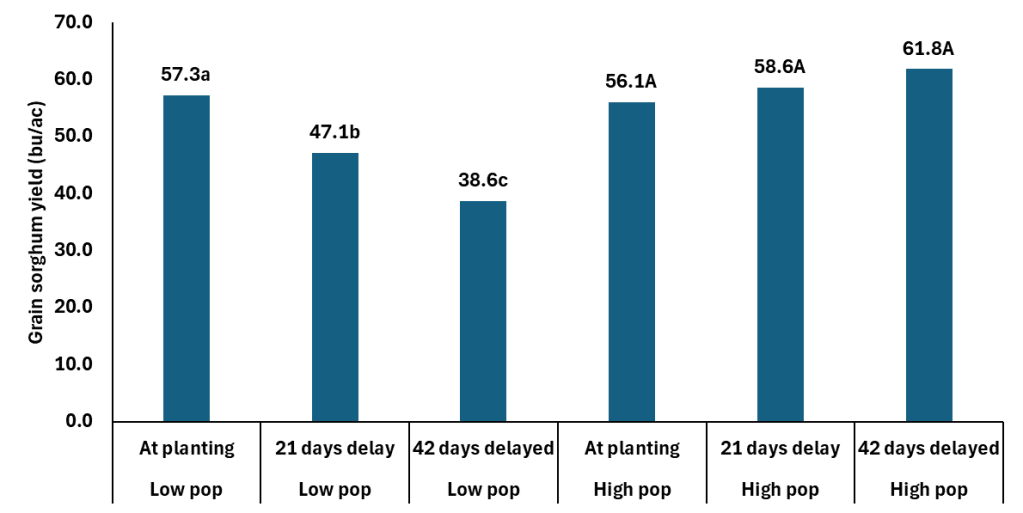

These data on yield components are really interesting when you then consider the grain yield data. The study, which is where the above yield component data came from, was looking at population by N timing. The Cropping Systems team planted 60K seeds per acre and hand thinned the stands down to 28K (low) and 36K (high). The N was applied at planting, 21 days after emergence, and 42 days after emergence. The rate of N applied was 75 lbs N per acre. It should be noted both locations were responsive to N fertilizer.

In the data you can without question see how delayed N management is not a tool for any members of the Low Pop Mafia. However, for those closer to the mid-30K range or above, there is no yield penalty and maybe a yield boost with delayed N. The extra yield is coming from slightly heavier berries and more berries per head. This is similar to what we are seeing in winter wheat, where delaying N is resulting in fewer tillers at harvest but more berries per head with slightly heavier berries.

Now we can throw even more data into the pot from the Precision Nutrient Management Team’s 2024 trials. The first trial below is a rate, time, and source project where the primary source was urea applied in front of the planter (pre) at rates ranging from 0 to 180 lbs N in 30 lb increments. Also applied pre was 90 lbs N as SuperU. Then at 30 days after planting we applied 90 lbs N as urea, SuperU, UAN, and UAN + Anvol.

Pre-plant urea topped out at 150 lbs of pre-plant N (57 bushel), but it was statistically equal to 90 lbs N (51 bushel). The use of SuperU pre did not statistically increase yield but hit 56 bushel. The in-season shots of 90 lbs of UAN statistically outperformed 90 pre and hit our highest yields of 63 and 62 bushel per acre. The dry sources applied in-season equaled their preplant counterparts.

The Burn Study at Perkins showed that the N could be applied in-season through a range of methods and still result in good yields. In this study 90 lbs of N was used and applied in a range of methods. The treatments for this study were applied on a different day than the N source study. You can see that in this case the dry untreated urea did quite well when applied over the top of sorghum. In this case we were able to get a rain in just two days, so we did get good tissue burn but quick incorporation with limited volatilization.

Take Home:

Unless working in low population scenarios, the data show that we should not be getting into any rush with sorghum and can wait until we know we have a good stand. We also have several options in terms of nitrogen sources and method of application.

Any questions or comments, feel free to contact Dr. Lofton or myself.

josh.lofton@okstate.edu

b.arnall@okstate.edu

Funding Provided by The Oklahoma Fertilizer Checkoff, The Oklahoma Sorghum Commission, and the National Sorghum Growers.